website-content-crawler

Pricing

Pay per usage

Developer

Matěj Šesták

Last modified: 3 years ago

Website Content Crawler is an Apify Actor that can perform a deep crawl of one or more websites to extract their content, such as documentation, knowledge bases, help articles, blog posts, or any other text content.

The actor was specifically designed to extract data for feeding, fine-tuning, or training large language models (LLMs) such as GPT-4, ChatGPT or LLaMA, and other AI models. It automatically removes headers, footers, menus, ads, and other noise from the web pages in order to return only the text content that can be directly fed to the models.

The actor has a simple input configuration, so it can be easily integrated into customer-facing products where customers enter just the URL of the website they want to have indexed by LLMs. The actor scales gracefully and can be used for small sites as well as sites with millions of pages. You can retrieve the results via the API in formats such as JSON or CSV, which can be fed directly to your LLM, a vector database, or ChatGPT.
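Once a run finishes, its results are a list of JSON items. A minimal sketch of turning such items into CSV with Python's standard library, assuming each item is a dict with at least the `url` and `text` fields (the `Overview` output view described in the changelog shows only these two). Fetching the items from the API is not shown here, and the sample item is hypothetical.

```python
import csv
import io
import json


def items_to_csv(items: list[dict], fields: tuple[str, ...] = ("url", "text")) -> str:
    """Serialize crawler result items to CSV, keeping only the given fields."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(fields))
    writer.writeheader()
    for item in items:
        # Missing fields become empty cells instead of raising KeyError.
        writer.writerow({field: item.get(field, "") for field in fields})
    return buffer.getvalue()


# Hypothetical items shaped like the crawler's JSON results (not real output).
items = json.loads('[{"url": "https://example.com/blog/article-1", "text": "Hello, world"}]')
print(items_to_csv(items))
```

The `csv` module quotes fields that contain commas or newlines, so multi-paragraph page text survives the round trip.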

How does it work?

Website Content Crawler only needs one or more start URLs, typically the top-level URL of the documentation site, blog, or knowledge base that you want to scrape. The actor crawls the start URLs, finds links to other pages, and recursively crawls those pages too, as long as their URL is under the start URL.

For example, if you enter the start URL https://example.com/blog/, the actor will crawl pages like https://example.com/blog/article-1 or https://example.com/blog/section/article-2, but will skip pages like https://example.com/docs/something-else.
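A minimal sketch of the "under the start URL" rule, assuming it means the same host plus a path prefix that ends at a path-segment boundary. The actor's actual matching (for example its handling of `www.`, query strings, or letter case in paths) may differ.

```python
from urllib.parse import urlsplit


def is_in_scope(start_url: str, url: str) -> bool:
    """Return True if `url` lies on the start URL's host, at or below its path."""
    start, candidate = urlsplit(start_url), urlsplit(url)
    # Different hosts are always out of scope in this sketch.
    if start.netloc.lower() != candidate.netloc.lower():
        return False
    start_path = start.path.rstrip("/")
    # In scope: the start path itself, or anything below it as a whole path segment,
    # so /blog/ matches /blog/article-1 but not /blogger.
    return candidate.path.rstrip("/") == start_path or candidate.path.startswith(start_path + "/")


print(is_in_scope("https://example.com/blog/", "https://example.com/docs/something-else"))
```

Comparing on segment boundaries rather than a plain string prefix avoids accidentally crawling sibling paths that merely share leading characters.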

The actor also extracts important metadata about the content, such as author, language, publishing date, etc. It can also save the full HTML and screenshots of the pages, which is useful for debugging.

Website Content Crawler can be further configured for optimal performance. For example, you can select the crawler type:

- Headless web browser (default) - Useful for modern websites with anti-scraping protections and JavaScript rendering. It recognizes common blocking patterns like CAPTCHAs and automatically retries blocked requests through new sessions. However, running web browsers is more expensive as it requires more computing resources and is slower.

- Raw HTTP client - High-performance crawling mode that uses raw HTTP requests to fetch the pages. It is faster and cheaper, but it might not work on all websites.

- Raw HTTP client with JS execution (JSDOM) (experimental) - A compromise between a browser and raw HTTP crawlers. It offers good performance and should work on almost all websites, including those with dynamic content. However, it is still experimental and might sometimes crash, so we don't recommend it in production settings yet.

You can also set additional input parameters such as a maximum number of pages, maximum crawling depth, maximum concurrency, proxy configuration, timeout, etc. to control the behavior and performance of the actor.

Designed for generative AI and LLMs

The results of the Website Content Crawler can help you feed, fine-tune, or train your large language models (LLMs) or provide context for ChatGPT prompts. The model can then answer questions based on your or your customers' websites and content.

Custom chatbots for customer support

Chatbots personalized on customer data such as documentation or knowledge bases are the next big thing for customer support and success teams. Let your customers simply type in the URL of their documentation or help center, and in minutes, your chatbot will have full knowledge about their product with zero integration costs.

Generate personalized content based on customer’s copy

ChatGPT and LLMs can write articles for you, but they won’t sound like you wrote them. Feed all your old blogs into your model to make it sound like you. Or train the model on your customers’ blogs and have it write in their tone of voice. Or help their technical writers draft new documentation pages.

Summarization, translation, proofreading at scale

Got some old docs or blogs that need to be improved? Use Website Content Crawler to scrape the content, feed it to the ChatGPT API, and ask it to summarize, proofread, translate, or change the style of the content.

Example

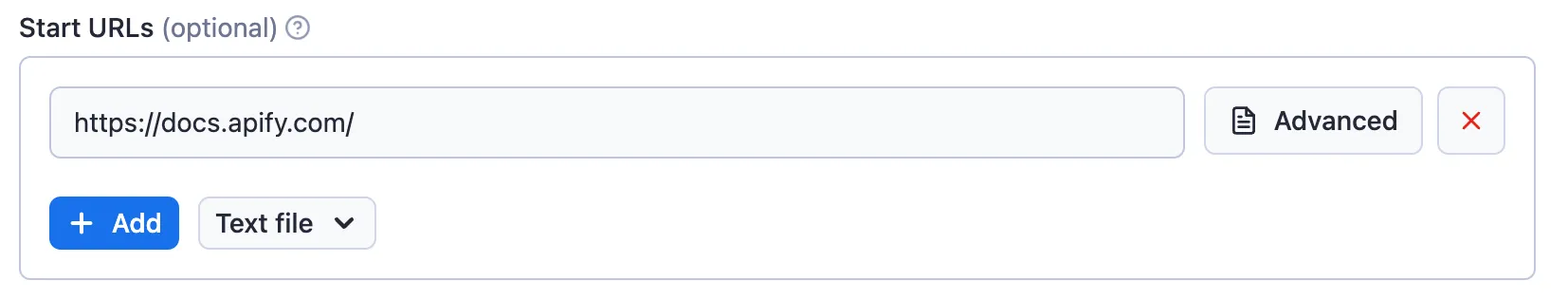

This example shows how to scrape all pages from the Apify documentation at https://docs.apify.com/:

Input

See full input with description.
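A minimal sketch of starting this example run from Python with the Apify API client (`pip install apify-client`). Several parts are assumptions: the actor ID is a hypothetical placeholder (use the ID shown on the actor's page), the `startUrls` shape `[{"url": ...}]` follows the common Apify input convention rather than anything stated on this page, the `APIFY_API_TOKEN` environment variable name is a choice made for this sketch, and the page limit is arbitrary. `maxCrawlPages` is the option referenced in the changelog below.

```python
import os

# Hypothetical placeholder: replace with this actor's real ID from its Apify page.
ACTOR_ID = "<username>/website-content-crawler"


def build_run_input(start_url: str, max_crawl_pages: int = 100) -> dict:
    """Build an input for crawling one site (assumed input shape, see above)."""
    return {
        "startUrls": [{"url": start_url}],
        "maxCrawlPages": max_crawl_pages,
    }


def run_crawler(start_url: str) -> list[dict]:
    """Run the actor and return all dataset items. Needs network access and an API token."""
    # Imported lazily so build_run_input() works without the client installed.
    from apify_client import ApifyClient

    client = ApifyClient(os.environ["APIFY_API_TOKEN"])
    run = client.actor(ACTOR_ID).call(run_input=build_run_input(start_url))
    return list(client.dataset(run["defaultDatasetId"]).iterate_items())
```

Calling `run_crawler("https://docs.apify.com/")` would block until the run finishes and then return the dataset items, each carrying fields such as `url` and `text`.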

Output

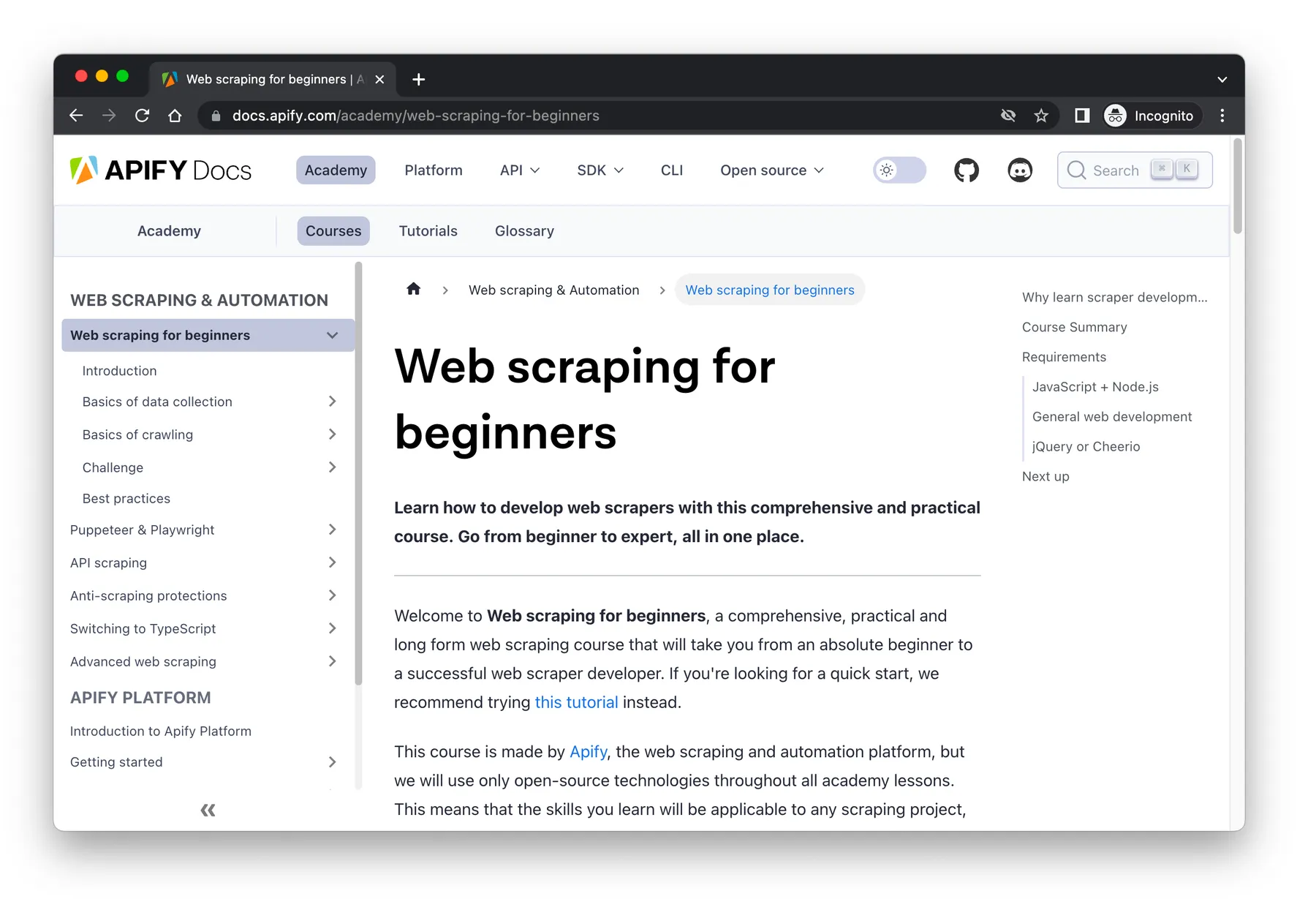

This is how one crawled page (https://docs.apify.com/academy/web-scraping-for-beginners) looks in a browser:

And here is how the crawling result looks in JSON format (note that other formats like CSV or Excel are also supported).

The main page content can be found in the `text` field, and it only contains the valuable content, without menus and other noise:
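A sketch of pulling the most useful fields out of one result item before feeding it to a model. The field names `url`, `text`, `crawl.loadedTime`, and `metadata.languageCode` come from the changelog below; the sample item is invented for illustration and its values are not real crawl output.

```python
from typing import Any


def summarize_item(item: dict[str, Any]) -> dict[str, Any]:
    """Keep the fields most useful for an LLM pipeline, tolerating missing ones."""
    metadata = item.get("metadata") or {}
    crawl = item.get("crawl") or {}
    return {
        "url": item.get("url"),
        "text": (item.get("text") or "").strip(),
        "language": metadata.get("languageCode"),
        "loaded_time": crawl.get("loadedTime"),
    }


# Invented example item (placeholder values, not real crawl output).
sample = {
    "url": "https://docs.apify.com/academy/web-scraping-for-beginners",
    "text": "  Web scraping for beginners  ",
    "metadata": {"languageCode": "en"},
    "crawl": {"loadedTime": "2023-05-04T12:00:00.000Z"},
}
print(summarize_item(sample))
```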

LangChain integration

LangChain is a popular framework for developing applications powered by language models. It provides an integration for Apify, so you can feed Actor results directly to LangChain’s vector databases, enabling you to easily create ChatGPT-like query interfaces to websites with documentation, knowledge bases, blogs, etc.

Example

First, install LangChain with common LLMs and the Apify API client for Python:

And then create a ChatGPT-powered answering machine:

The query produces an answer like this:

Apify is a platform for developing, running, and sharing serverless cloud programs. It enables users to create web scraping and automation tools and publish them on the Apify platform.

https://docs.apify.com/platform/actors, https://docs.apify.com/platform/actors/running/actors-in-store, https://docs.apify.com/platform/security, https://docs.apify.com/platform/actors/examples

For details and a Jupyter notebook, see Apify integration for LangChain.
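The integration hinges on mapping each dataset item to a document that carries the page text plus its URL as the source, so an answer can cite the pages it came from, as in the source list above. A dependency-free sketch of that mapping step, assuming items with `url` and `text` fields; the `Document` dataclass is a stand-in that mirrors the `page_content`/`metadata` shape of LangChain documents and is not LangChain's own class.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    """Stand-in mirroring a LangChain document (page_content + metadata)."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def item_to_document(item: dict) -> Document:
    # Null or missing text becomes "" so indexing does not fail on None.
    return Document(page_content=item.get("text") or "", metadata={"source": item["url"]})


def collect_sources(docs: list[Document]) -> str:
    """Join unique source URLs in first-seen order, comma-separated."""
    unique = dict.fromkeys(doc.metadata["source"] for doc in docs)
    return ", ".join(unique)
```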

How much does Website Content Crawler cost?

You pay only for the Apify platform usage required by the Actor to crawl the websites and extract the content. The exact price depends on the crawler type and settings, website complexity, network speed, and other factors that vary from run to run.

The main cost driver of Website Content Crawler is the actor compute units (CU), where 1 CU corresponds to an actor with 1 GB of memory running for 1 hour. At the baseline price of $0.25/CU, our tests show that actor usage costs approximately:

- $0.5 - $5 per 1,000 web pages with a headless browser, depending on the website

- $0.2 per 1,000 web pages with raw HTTP crawler

Note that the Apify Free plan gives you $5 free credits every month and access to Apify Proxy, which is sufficient for testing and low-volume use cases.
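The CU definition makes run cost a simple product: memory (GB) × duration (hours) × price per CU. A small sketch of that arithmetic and of what the approximate per-1,000-page figures above imply for a budget; those figures are test results, not guarantees, so treat the page counts as rough orders of magnitude.

```python
def compute_units(memory_gb: float, hours: float) -> float:
    """Compute units consumed: 1 CU = 1 GB of memory running for 1 hour."""
    return memory_gb * hours


def run_cost_usd(memory_gb: float, hours: float, price_per_cu: float = 0.25) -> float:
    """Platform cost of a run at the baseline CU price."""
    return compute_units(memory_gb, hours) * price_per_cu


def pages_for_budget(budget_usd: float, cost_per_1000_pages: float) -> float:
    """Rough page count a budget covers, given an approximate cost per 1,000 pages."""
    return budget_usd / cost_per_1000_pages * 1000


# A 4 GB run lasting 30 minutes uses 2 CU, i.e. $0.50.
print(run_cost_usd(4, 0.5))
# The $5 monthly free credit at roughly $0.2 per 1,000 pages (raw HTTP crawler).
print(round(pages_for_budget(5, 0.2)))
```

With a headless browser at $0.5 to $5 per 1,000 pages, the same $5 covers roughly 1,000 to 10,000 pages.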

Troubleshooting

- If the extracted text doesn’t contain the expected page content, try to select another Crawler type. Generally, a headless browser will extract more text as it loads dynamic page content and is less likely to be blocked.

- If the extracted text has more than expected page content (e.g. navigation or footer), try to select another Text extractor, or use the Remove HTML elements setting to skip unwanted parts of the page.
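As an illustration of those two settings, a sketch of an input override. `textExtractor`, its `readableText` value, and `removeElementsCssSelector` are option names given in the changelog below; the base input shape and the selector values are hypothetical. Whether a custom selector replaces or extends the default removal of navigation, header, and footer is not stated here, so the example lists `nav` and `footer` explicitly.

```python
# Assumed input shape; only the two option names below come from the changelog.
BASE_INPUT = {"startUrls": [{"url": "https://example.com/blog/"}]}


def with_cleanup(run_input: dict, extra_selectors: list[str]) -> dict:
    """Return a copy of the input using the readableText extractor and extra removals."""
    updated = dict(run_input)  # shallow copy so the base input is left untouched
    updated["textExtractor"] = "readableText"
    # Several CSS selectors combine into one selector list separated by commas.
    updated["removeElementsCssSelector"] = ", ".join(extra_selectors)
    return updated


print(with_cleanup(BASE_INPUT, ["nav", "footer", ".cookie-banner"]))
```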

Known limitations and development roadmap

Website Content Crawler is under active development. Here are some things that we're currently working on:

- Support for other file types such as PDF, TXT, DOCX, PPTX, or MD

- Support for language selection

- Integration with ChatGPT Retrieval Plugin to automatically update the vector database

- Other crawler types and automatic selection

- Support for website authentication

If you’re interested in these or other features, please get in touch at ai@apify.com or submit an issue.

Is it legal to scrape content?

Web scraping is generally legal if you scrape publicly available non-personal data. What you do with the data is another question. Documentation, help articles, or blogs are typically protected by copyright, so you can't republish the content without permission. However, to scrape your own or your customers’ documentation or blogs, you can easily get their consent, which can be given simply by accepting your terms of service. If you want to learn more about the legality of web scraping, read our detailed blog post.

Changelog

0.3.6 (2023-05-04)

- Input:

- Made the `initialConcurrency` option visible in the input editor.

- Added the `aggressivePruning` option. With this option set to `true`, the crawler will try to deduplicate the scraped content. This can be useful when the crawler is scraping a website with a lot of duplicate content (header menus, footers, etc.).

- Behavior:

- The actor now stays alive and restarts the crawl on certain known errors (Playwright Assertion Error).
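How `aggressivePruning` deduplicates is not described here. One common way to approximate this kind of cleanup, shown as an assumption rather than the actor's actual algorithm, is to drop text lines that recur across many pages of the same crawl, since menus and footers tend to repeat verbatim.

```python
from collections import Counter


def prune_repeated_lines(pages: list[str], max_share: float = 0.5) -> list[str]:
    """Remove non-blank lines that appear on more than `max_share` of the pages.

    Each line is counted at most once per page, so repeats within a single
    page do not inflate its count. Blank lines are kept to preserve paragraphs.
    """
    if len(pages) < 2:
        return list(pages)  # nothing can be called a cross-page repeat
    page_lines = [text.splitlines() for text in pages]
    counts = Counter(line for lines in page_lines for line in set(lines))
    limit = max_share * len(pages)
    return [
        "\n".join(line for line in lines if not line.strip() or counts[line] <= limit)
        for lines in page_lines
    ]
```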

0.3.4 (2023-05-04)

- Input:

- Added a new hidden option, `initialConcurrency`. This option sets the initial number of web browsers or HTTP clients running in parallel during the actor run. Increasing this number can speed up the crawling process. Bear in mind that this option is hidden and can be changed only by editing the actor input in the JSON editor.

0.3.3 (2023-04-28)

- Input:

- Added a new option, `maxResults`, to limit the total number of results. If used with `maxCrawlPages`, the crawler will stop when either of the limits is reached.
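The "whichever limit is hit first" rule can be sketched as a breadth-first crawl loop with two counters. This is an illustration only: it assumes `maxCrawlPages` counts pages fetched while `maxResults` counts items saved (a fetched page may yield no result), which the changelog does not spell out, and `fetch` is a hypothetical callable standing in for the real page processing.

```python
from collections import deque
from typing import Callable, Iterable, Optional


def crawl_with_limits(
    start_urls: Iterable[str],
    fetch: Callable[[str], tuple[Optional[str], list[str]]],
    max_crawl_pages: Optional[int] = None,
    max_results: Optional[int] = None,
) -> list[str]:
    """Breadth-first crawl that stops when either limit is reached.

    `fetch(url)` returns (result_or_None, discovered_links).
    """
    queue = deque(start_urls)
    seen = set(queue)
    pages, results = 0, []
    while queue:
        if max_crawl_pages is not None and pages >= max_crawl_pages:
            break
        if max_results is not None and len(results) >= max_results:
            break
        url = queue.popleft()
        pages += 1
        result, links = fetch(url)
        if result is not None:
            results.append(result)
        for link in links:
            if link not in seen:  # enqueue each URL only once
                seen.add(link)
                queue.append(link)
    return results
```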

0.3.1 (2023-04-24)

- Input:

- Added an option to download linked document files from the page: `saveFiles`. This is useful for downloading PDF, DOCX, XLSX, and similar files from the crawled pages. The files are saved to the default key-value store of the run, and the links to the files are added to the dataset.

- Added a new crawler, Stealthy web browser, that uses a Firefox browser with a stealthy profile. It is useful for crawling websites that block scraping.

0.0.13 (2023-04-18)

- Input:

- Added a new `textExtractor` option, `readableText`. It is generally very accurate and has a good ratio of coverage to noise. It extracts only the main article body (similar to `unfluff`) but can work for more complex pages.

- Added the `readableTextCharThreshold` option. This only applies to the `readableText` extractor. It allows fine-tuning which part of the text should be focused on. That only matters for very complex pages where it is not obvious what should be extracted.

- Output:

- Added a simplified output view, `Overview`, that has only `url` and `text` for a quick output check.

- Behavior:

- Domains starting with `www.` are now considered equal to ones without it. This means that the start URL `https://apify.com` can enqueue `https://www.apify.com` and vice versa.
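A sketch of a host comparison that ignores a single leading `www.`, matching the example above. How the actor normalizes hosts internally (ports, other subdomains) is an assumption here; this version compares lowercased hostnames only.

```python
from urllib.parse import urlsplit


def normalized_host(url: str) -> str:
    """Lowercased hostname with one leading "www." removed."""
    host = (urlsplit(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def same_site(url_a: str, url_b: str) -> bool:
    return normalized_host(url_a) == normalized_host(url_b)


print(same_site("https://apify.com", "https://www.apify.com"))
```

Other subdomains such as `docs.` are deliberately still treated as different sites.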

0.0.10 (2023-04-05)

- Input:

- Added a new `crawlerType` option, `jsdom`, for processing with JSDOM. It allows client-side script processing, trying to mimic browser behavior in Node.js but with much better performance. This is still experimental and may crash on some particular pages.

- Added the `dynamicContentWaitSecs` option (defaults to 10 s), which is the maximum time to wait for dynamically rendered content.

- Output (BREAKING CHANGE):

- Renamed `crawl.date` to `crawl.loadedTime`.

- Moved `crawl.screenshotUrl` to the top-level object.

- The `markdown` field was made visible.

- Renamed `metadata.language` to `metadata.languageCode`.

- Removed `metadata.createdAt` (for now).

- Added `metadata.keywords`.

- Behavior:

- Added waiting for dynamically rendered content (supported in Headless browser and JSDOM crawlers). The crawler checks every half a second for content changes. When there are no changes for 2 seconds, the crawler proceeds to extraction.
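The waiting rule reads naturally as a polling loop: check every 0.5 s, return once the content has been unchanged for 2 s, and stop waiting after `dynamicContentWaitSecs` (10 s by default). A minimal sketch of that loop, not the actor's code, assuming a hypothetical `get_content` callable that returns the current rendered content; `sleep` is injectable so the logic can be exercised without real waiting.

```python
import time
from typing import Callable


def wait_for_stable_content(
    get_content: Callable[[], str],
    max_wait_secs: float = 10.0,  # mirrors the dynamicContentWaitSecs default
    poll_secs: float = 0.5,       # "checks every half a second"
    stable_secs: float = 2.0,     # "no changes for 2 seconds"
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll until the content is unchanged for `stable_secs` or `max_wait_secs` elapses.

    Elapsed time is tracked by summing poll intervals, so time spent inside
    get_content() is ignored (a simplification).
    """
    elapsed = 0.0
    unchanged_for = 0.0
    last = get_content()
    while elapsed < max_wait_secs and unchanged_for < stable_secs:
        sleep(poll_secs)
        elapsed += poll_secs
        current = get_content()
        if current == last:
            unchanged_for += poll_secs
        else:
            last, unchanged_for = current, 0.0  # content changed: restart the stability timer
    return last
```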

0.0.7 (2023-03-30)

- Input:

- BREAKING CHANGE: Added the `textExtractor` input option to choose how strictly to parse the content. Swapped the previous default, `unfluff`, for `CrawleeHtmlToText`, which in general extracts more text. We chose to output more text rather than less by default.

- Added `removeElementsCssSelector`, which allows passing extra CSS selectors to further strip down the HTML before it is converted to text. This can help with fine-tuning the output. By default, the actor removes the page navigation bar, header, and footer.

- Output:

- Added markdown to the output if the `saveMarkdown` option is chosen.

- All extractor outputs + HTML as a link can be obtained if `debugMode` is set.

- Added `pageType` to the output (only as `debug` for now); it will be fine-tuned in the future.

- Behavior:

- Added deduplication by `canonicalUrl`. E.g., if several different URLs point to the same canonical URL, only the first one is kept and the rest are skipped.

- Skip pages that redirect outside the original start URLs' domain.

- Only run a single text extractor unless in debug mode. This improves performance.
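The canonical-URL deduplication and the redirect rule can be sketched together as a filter over fetched pages. Assumptions for this sketch: each page record has the requested `url`, the `loaded_url` after redirects, and an optional `canonical_url` (hypothetical field names that may not match the actor's output), and "outside the start URLs' domain" is checked by comparing hostnames.

```python
from urllib.parse import urlsplit


def filter_pages(pages: list[dict], start_urls: list[str]) -> list[dict]:
    """Drop pages that redirected off the start domains or repeat a seen canonical URL."""
    allowed_hosts = {urlsplit(u).hostname for u in start_urls}
    seen_canonical: set[str] = set()
    kept = []
    for page in pages:
        if urlsplit(page["loaded_url"]).hostname not in allowed_hosts:
            continue  # redirected outside the original start URLs' domain
        # Pages without a canonical link fall back to their own loaded URL.
        key = page.get("canonical_url") or page["loaded_url"]
        if key in seen_canonical:
            continue  # an earlier URL already produced this canonical page
        seen_canonical.add(key)
        kept.append(page)
    return kept
```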